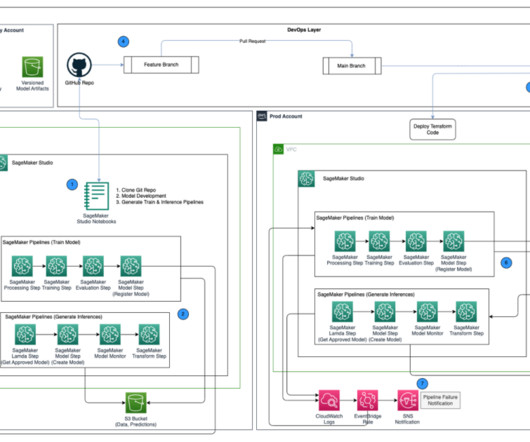

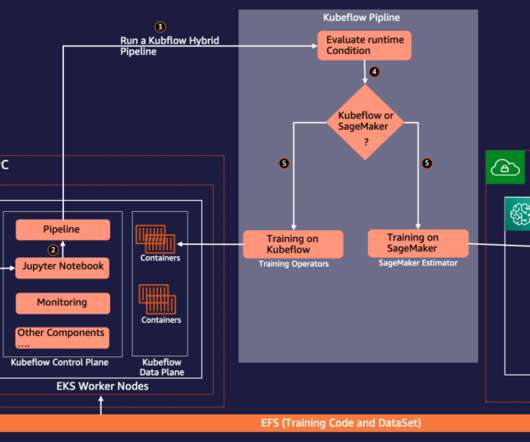

Promote pipelines in a multi-environment setup using Amazon SageMaker Model Registry, HashiCorp Terraform, GitHub, and Jenkins CI/CD

AWS Machine Learning

NOVEMBER 9, 2023

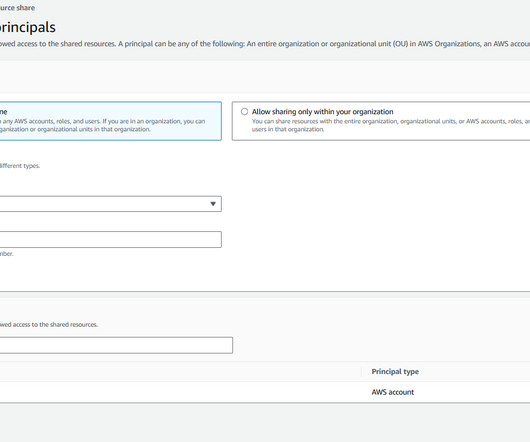

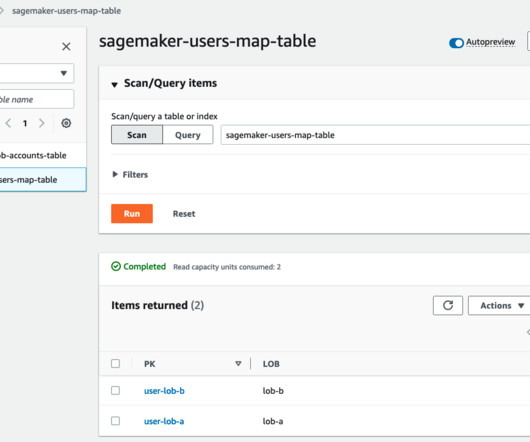

- Prod environment – The environment to which ML pipelines from dev are promoted as a first step, and where they are then scheduled and monitored over time.
- Central model registry – Amazon SageMaker Model Registry is set up in a separate AWS account to track the model versions generated in both the dev and prod environments.
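Keeping the registry in its own account means the dev and prod accounts need cross-account permission on the registry's model package groups. The following is a minimal Python sketch that builds such a resource policy using only the standard library. It is not the post's implementation. The group name and account IDs are hypothetical placeholders. The two-statement layout (read access on the group, create/describe/list/update on its versions) follows the cross-account pattern in the SageMaker documentation, but check the action list against the current IAM reference before relying on it. The policy could then be attached with Terraform or, as the closing comment shows, with boto3's `put_model_package_group_policy`.

```python
import json


def build_registry_policy(
    registry_account: str,
    region: str,
    group_name: str,
    workload_accounts: list[str],
) -> dict:
    """Return a resource policy that lets the dev and prod (workload) accounts
    use a model package group owned by the central registry account."""
    prefix = f"arn:aws:sagemaker:{region}:{registry_account}"
    group_arn = f"{prefix}:model-package-group/{group_name}"
    # Individual versions in a group have ARNs of the form
    # model-package/<group-name>/<version>, so a wildcard covers all of them.
    versions_arn = f"{prefix}:model-package/{group_name}/*"
    principals = [f"arn:aws:iam::{acct}:root" for acct in workload_accounts]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets workload accounts look up the group itself.
                "Sid": "AllowModelPackageGroupRead",
                "Effect": "Allow",
                "Principal": {"AWS": principals},
                "Action": ["sagemaker:DescribeModelPackageGroup"],
                "Resource": group_arn,
            },
            {
                # Lets workload accounts register, list, inspect, and update
                # (for example, change the approval status of) model versions.
                "Sid": "AllowModelPackageVersions",
                "Effect": "Allow",
                "Principal": {"AWS": principals},
                "Action": [
                    "sagemaker:CreateModelPackage",
                    "sagemaker:DescribeModelPackage",
                    "sagemaker:ListModelPackages",
                    "sagemaker:UpdateModelPackage",
                ],
                "Resource": versions_arn,
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder account IDs and a hypothetical group name.
    policy = build_registry_policy(
        registry_account="222222222222",
        region="us-east-1",
        group_name="example-model-group",
        workload_accounts=["111111111111", "333333333333"],  # dev, prod
    )
    print(json.dumps(policy, indent=2))
    # From the registry account, the policy could then be attached with, e.g.:
    # boto3.client("sagemaker").put_model_package_group_policy(
    #     ModelPackageGroupName="example-model-group",
    #     ResourcePolicy=json.dumps(policy),
    # )
```

Granting access at the account-root principal keeps the sketch short. In practice you might narrow `principals` to the specific IAM roles that the dev and prod pipelines run under.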

Let's personalize your content