AWS at NVIDIA GTC 2024: Accelerate innovation with generative AI on AWS

AWS Machine Learning

APRIL 11, 2024

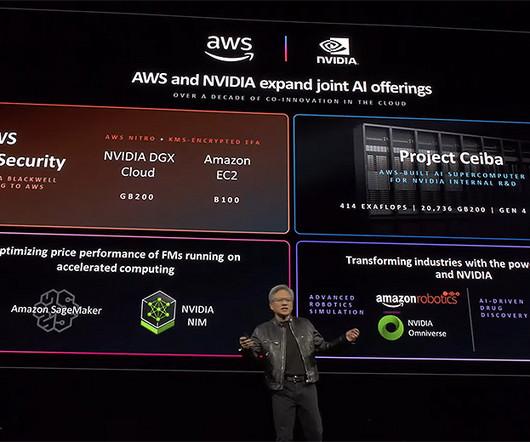

AWS was delighted to present to and connect with over 18,000 in-person and 267,000 virtual attendees at NVIDIA GTC, a global artificial intelligence (AI) conference that took place in March 2024 in San Jose, California. It was the first time since 2019 that the event returned to a hybrid format with an in-person experience.
